Statistical Policing

Goals

In this example, we combine a sliding window rate meter with a probabilistic packet dropper to implement simple statistical policing.

The Model

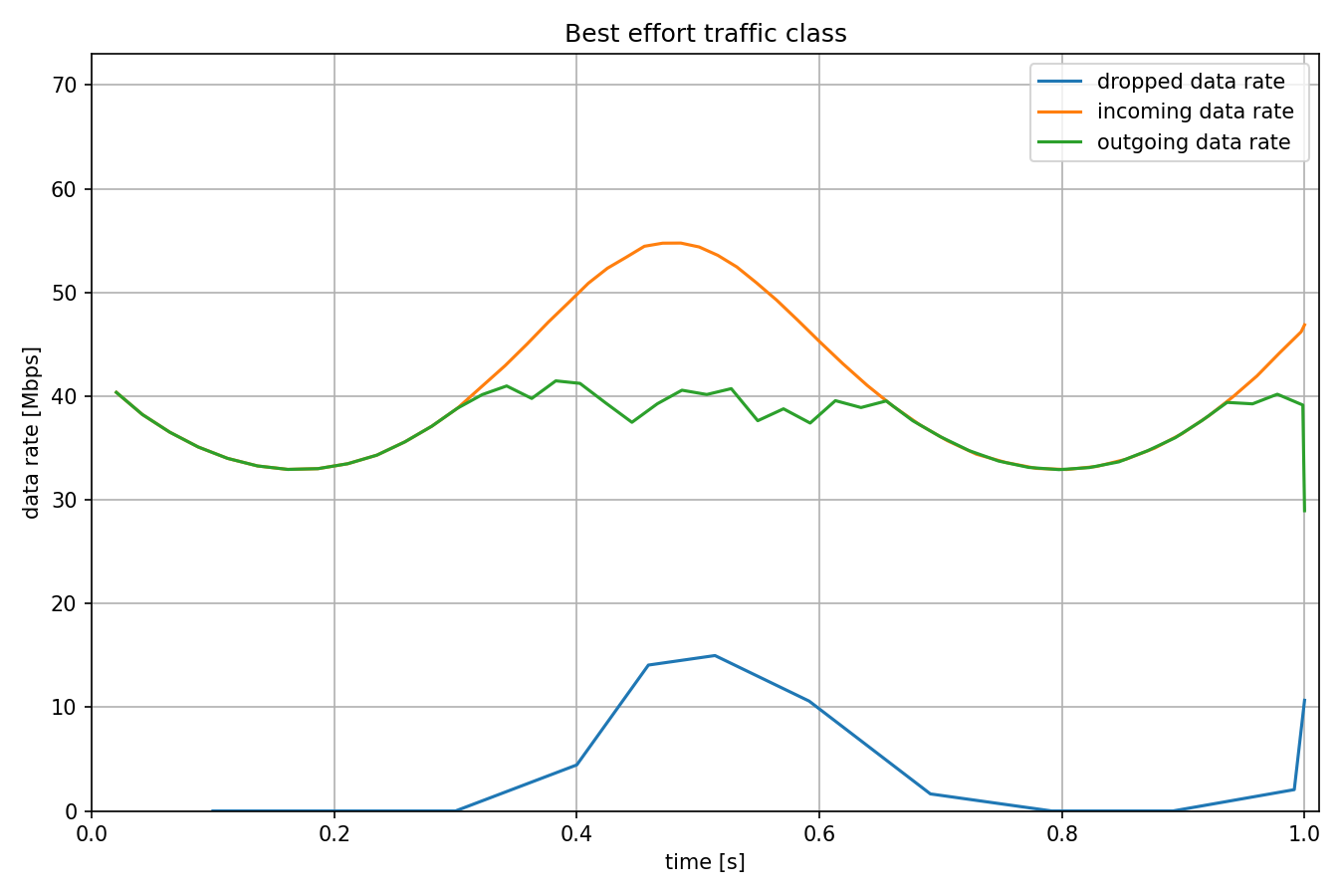

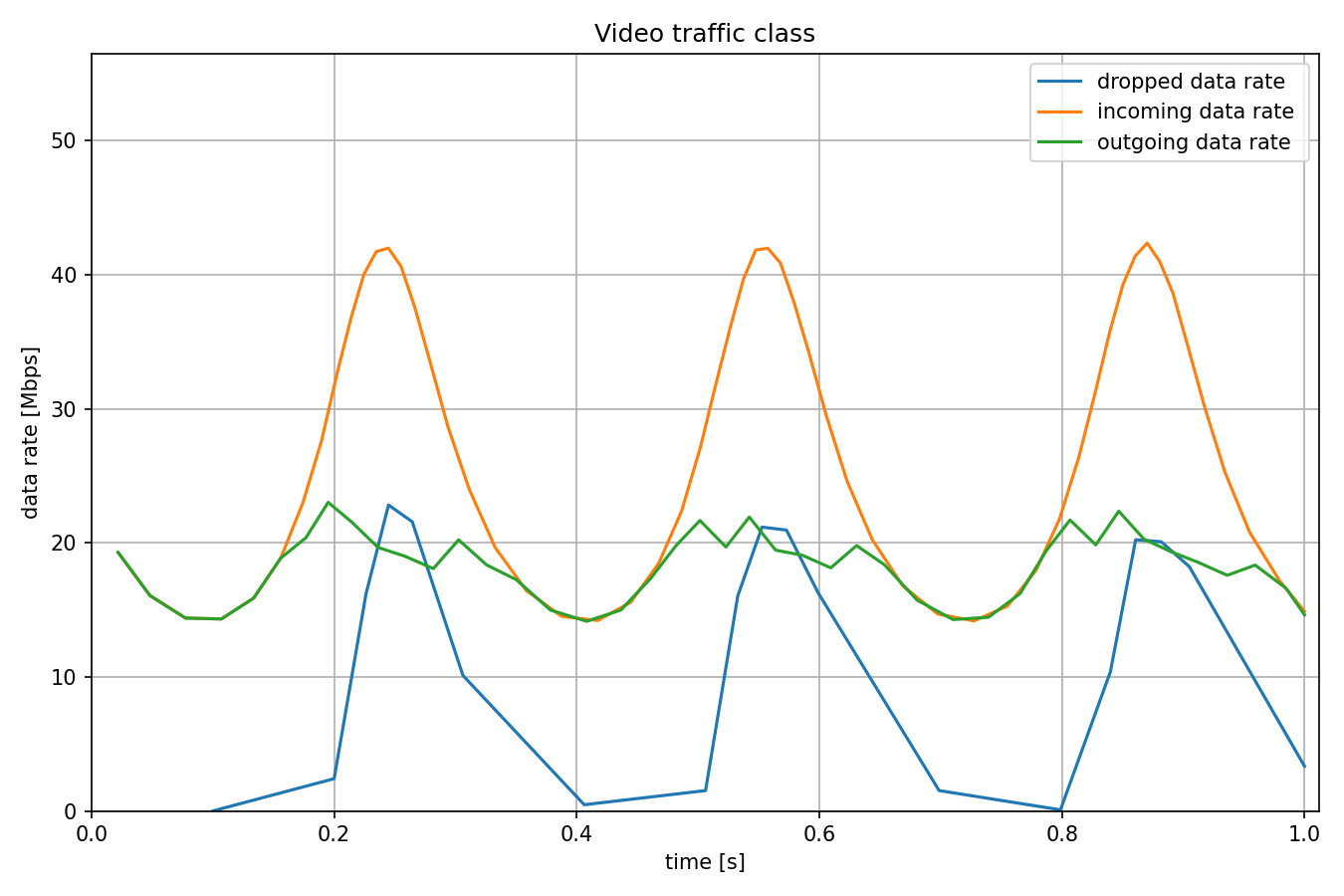

This configuration combines a sliding window rate meter with a statistical rate limiter. The meter measures the throughput by summing the bytes of the packets that arrived within the time window. The limiter drops packets probabilistically, based on how the measured data rate compares to the maximum allowed data rate.
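The interplay of the two components can be illustrated with a minimal Python sketch. It is not INET code: the class and function names are hypothetical, and the drop probability of `1 - maxDatarate / measuredDatarate` is an assumption chosen so that the expected passed rate equals the limit. The actual modules may compute these values differently.

```python
import random
from collections import deque


class SlidingWindowRateMeter:
    """Hypothetical meter: data rate (bit/s) over the most recent time window."""

    def __init__(self, time_window):
        self.time_window = time_window  # seconds
        self.samples = deque()          # (arrival_time, bits) pairs
        self.total_bits = 0

    def measure(self, now, packet_bytes):
        bits = packet_bytes * 8
        self.samples.append((now, bits))
        self.total_bits += bits
        # Forget packets that have slid out of the window.
        while self.samples and self.samples[0][0] <= now - self.time_window:
            _, old_bits = self.samples.popleft()
            self.total_bits -= old_bits
        return self.total_bits / self.time_window


def drop_probability(measured_datarate, max_datarate):
    """Assumed rule: drop just enough so the expected passed rate is the limit."""
    if measured_datarate <= max_datarate:
        return 0.0
    return 1.0 - max_datarate / measured_datarate


def police(packets, time_window, max_datarate, rng):
    """Return the (time, bytes) packets that survive the limiter."""
    meter = SlidingWindowRateMeter(time_window)
    passed = []
    for now, size in packets:
        rate = meter.measure(now, size)
        if rng.random() >= drop_probability(rate, max_datarate):
            passed.append((now, size))
    return passed


if __name__ == "__main__":
    # 1000 B every 100 us is 80 Mbps offered load against a 40 Mbps limit.
    offered = [(i * 1e-4, 1000) for i in range(10000)]
    survivors = police(offered, 0.01, 40e6, random.Random(42))
    print(f"passed {len(survivors)} of {len(offered)} packets")
```

With twice the allowed load offered, roughly half of the packets should pass once the window has filled. Because the decision is random per packet, the passed rate matches the limit only on average, which is what distinguishes this approach from a deterministic policer such as a token bucket.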

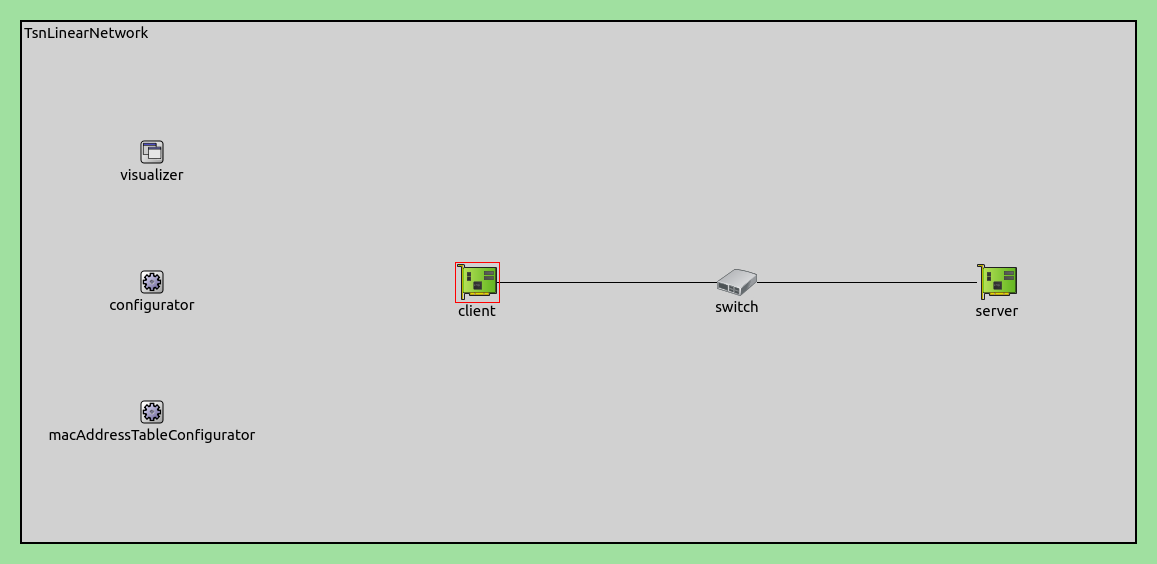

Here is the network:

Here is the configuration:

[General]

network = inet.networks.tsn.TsnLinearNetwork

sim-time-limit = 1s

description = "Per-stream filtering using sliding window rate metering and statistical rate limiting"

# client applications

*.client.numApps = 2

*.client.app[*].typename = "UdpSourceApp"

*.client.app[0].display-name = "best effort"

*.client.app[1].display-name = "video"

*.client.app[*].io.destAddress = "server"

*.client.app[0].io.destPort = 1000

*.client.app[1].io.destPort = 1001

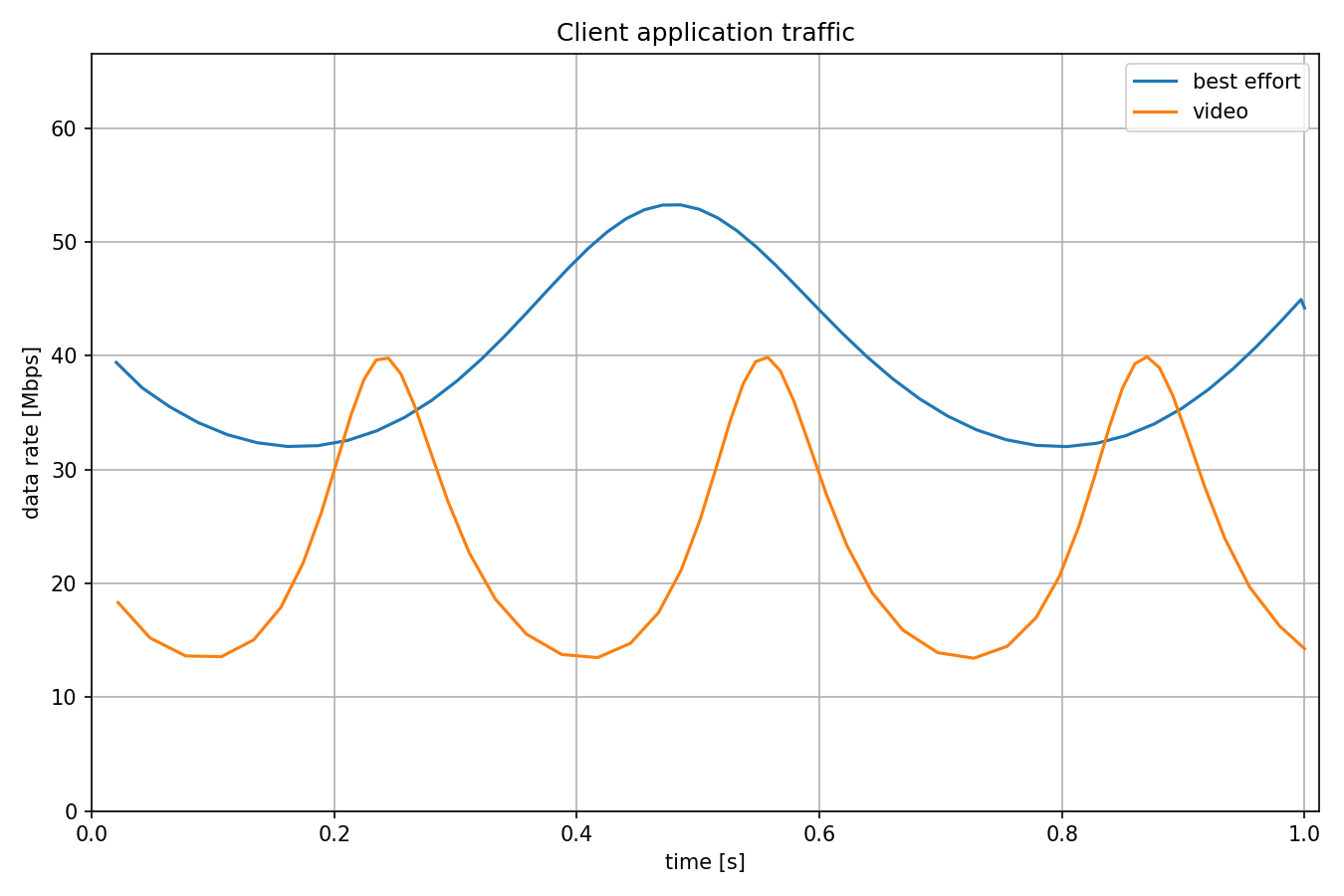

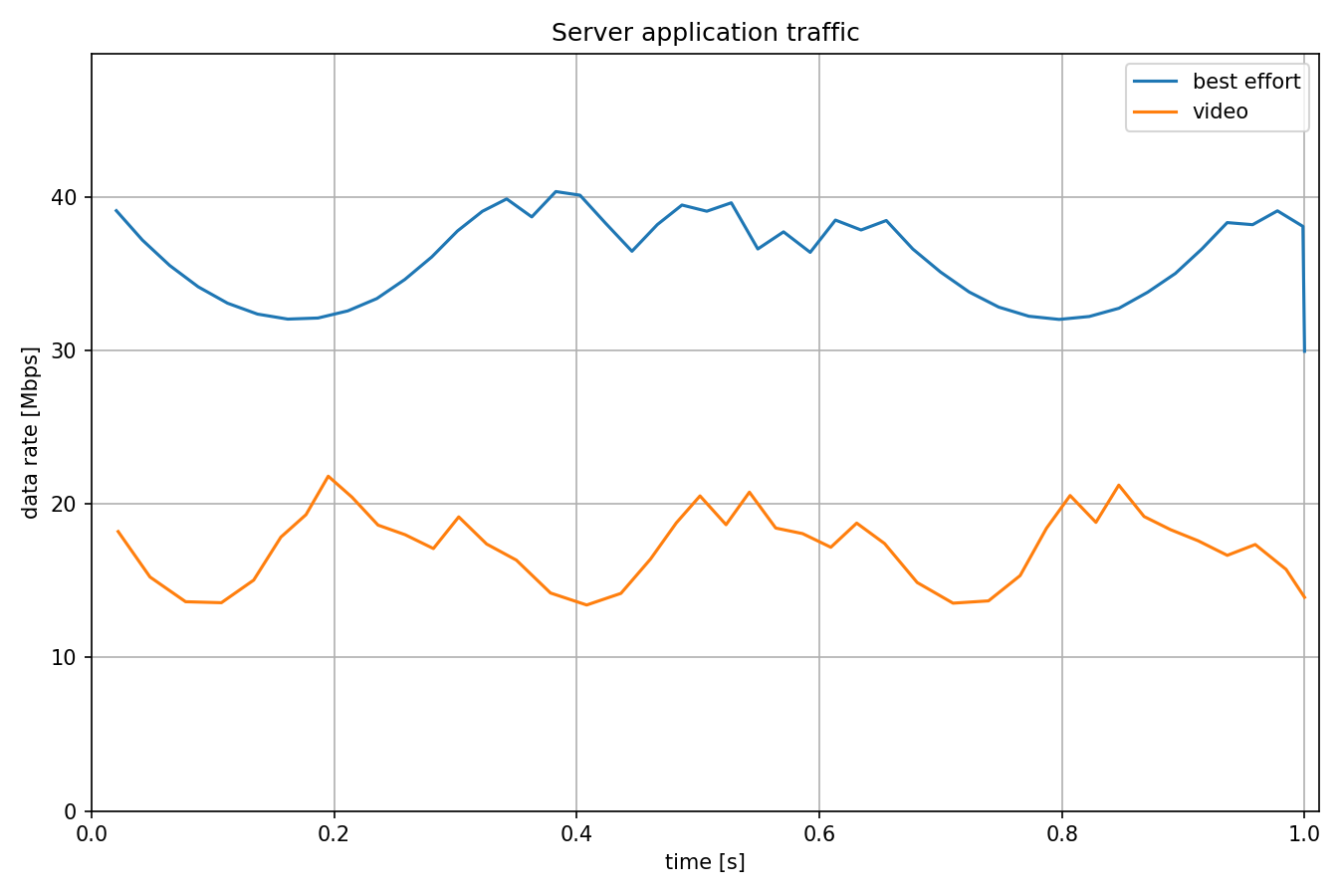

# best-effort stream ~40Mbps

*.client.app[0].source.packetLength = 1000B

*.client.app[0].source.productionInterval = 200us + replaceUnit(sin(dropUnit(simTime() * 10)), "ms") / 20

# video stream ~20Mbps

*.client.app[1].source.packetLength = 500B

*.client.app[1].source.productionInterval = 200us + replaceUnit(sin(dropUnit(simTime() * 20)), "ms") / 10

# enable outgoing streams

*.client.hasOutgoingStreams = true

# client stream identification

*.client.bridging.streamIdentifier.identifier.mapping = [{stream: "best effort", packetFilter: expr(udp.destPort == 1000)},
                                                         {stream: "video", packetFilter: expr(udp.destPort == 1001)}]

# client stream encoding

*.client.bridging.streamCoder.encoder.mapping = [{stream: "best effort", pcp: 0},
                                                 {stream: "video", pcp: 4}]

# server applications

*.server.numApps = 2

*.server.app[*].typename = "UdpSinkApp"

*.server.app[0].io.localPort = 1000

*.server.app[1].io.localPort = 1001

# enable per-stream filtering

*.switch.hasIngressTrafficFiltering = true

# disable forwarding IEEE 802.1Q C-Tag

*.switch.bridging.directionReverser.reverser.excludeEncapsulationProtocols = ["ieee8021qctag"]

# stream decoding

*.switch.bridging.streamCoder.decoder.mapping = [{pcp: 0, stream: "best effort"},
                                                 {pcp: 4, stream: "video"}]

# per-stream filtering

*.switch.bridging.streamFilter.ingress.numStreams = 2

*.switch.bridging.streamFilter.ingress.classifier.mapping = {"best effort": 0, "video": 1}

*.switch.bridging.streamFilter.ingress.meter[0].display-name = "best effort"

*.switch.bridging.streamFilter.ingress.meter[1].display-name = "video"

# per-stream filtering

*.switch*.bridging.streamFilter.ingress.meter[*].typename = "SlidingWindowRateMeter"

*.switch*.bridging.streamFilter.ingress.meter[*].timeWindow = 10ms

*.switch*.bridging.streamFilter.ingress.filter[*].typename = "StatisticalRateLimiter"

*.switch*.bridging.streamFilter.ingress.filter[0].maxDatarate = 40Mbps

*.switch*.bridging.streamFilter.ingress.filter[1].maxDatarate = 20Mbps
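The `~40Mbps` and `~20Mbps` comments in the configuration above follow from the packet length and the production interval. The short Python sketch below works through that arithmetic, including the swing caused by the sinusoidal term. The helper name `rate_range` is hypothetical, and the calculation counts application payload only. Assuming the meter in the switch sees whole frames, protocol headers would make the metered rate somewhat higher.

```python
def rate_range(packet_bytes, base_interval, amplitude):
    """Return (min, nominal, max) application data rate in bit/s.

    The production interval swings between base_interval - amplitude and
    base_interval + amplitude, because sin() ranges over [-1, 1].
    """
    bits = packet_bytes * 8
    return (
        bits / (base_interval + amplitude),  # longest interval, lowest rate
        bits / base_interval,                # sinusoidal term at zero
        bits / (base_interval - amplitude),  # shortest interval, highest rate
    )


# best effort: 1000 B every 200us + sin(10 t) ms / 20, so the amplitude is 50 us
best_effort = rate_range(1000, 200e-6, 1e-3 / 20)

# video: 500 B every 200us + sin(20 t) ms / 10, so the amplitude is 100 us
video = rate_range(500, 200e-6, 1e-3 / 10)

if __name__ == "__main__":
    for name, (low, nominal, high) in (("best effort", best_effort), ("video", video)):
        print(f"{name}: {low / 1e6:.1f} .. {nominal / 1e6:.1f} .. {high / 1e6:.1f} Mbps")
```

Under these assumptions, the best-effort stream oscillates between about 32 and 53 Mbps around its 40 Mbps limit, and the video stream between about 13 and 40 Mbps around its 20 Mbps limit, so both streams periodically exceed their limits and trigger probabilistic drops.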

Try It Yourself

If you already have INET and OMNeT++ installed, start the IDE by typing omnetpp, import the INET project into the IDE, then navigate to the inet/showcases/tsn/streamfiltering/statistical folder in the Project Explorer. There, you can view and edit the showcase files, run simulations, and analyze results.

Otherwise, there is an easy way to install INET and OMNeT++ using opp_env, and run the simulation interactively.

Ensure that opp_env is installed on your system, then execute:

$ opp_env run inet-4.4 --init -w inet-workspace --install --chdir \
    -c 'cd inet-4.4.*/showcases/tsn/streamfiltering/statistical && inet'

This command creates an inet-workspace directory, installs the appropriate versions of INET and OMNeT++ within it, and launches the inet command in the showcase directory for interactive simulation.

Alternatively, for a more hands-on experience, you can first set up the workspace and then open an interactive shell:

$ opp_env install --init -w inet-workspace inet-4.4

$ cd inet-workspace

$ opp_env shell

Inside the shell, start the IDE by typing omnetpp, import the INET project, then start exploring.