Asynchronous Shaping

Goals

In this example we demonstrate how to use the asynchronous traffic shaper.

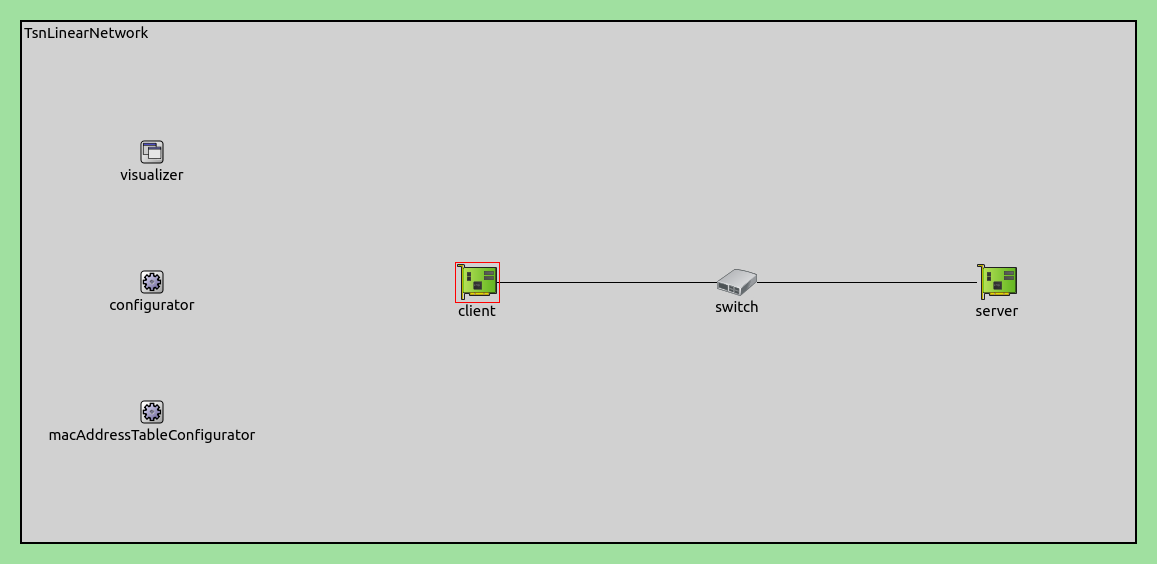

The Model

The network contains three nodes. The client and the server are TsnDevice modules, and the switch is a TsnSwitch module. The links between them are 100 Mbps EthernetLink channels.

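Four applications, two in the client and two in the server, create two independent data streams between the client and the server. With 1000-byte packets and mean production intervals of 200 µs and 400 µs, the mean application-level data rate in the client is about 40 Mbps for the best effort stream and about 20 Mbps for the video stream.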

```ini
# client applications
*.client.numApps = 2
*.client.app[*].typename = "UdpSourceApp"
*.client.app[0].display-name = "best effort"
*.client.app[1].display-name = "video"
*.client.app[*].io.destAddress = "server"
*.client.app[0].io.destPort = 1000
*.client.app[1].io.destPort = 1001
*.client.app[*].source.packetLength = 1000B
*.client.app[0].source.productionInterval = exponential(200us)
*.client.app[1].source.productionInterval = exponential(400us)

# server applications
*.server.numApps = 2
*.server.app[*].typename = "UdpSinkApp"
*.server.app[0].display-name = "best effort"
*.server.app[1].display-name = "video"
*.server.app[0].io.localPort = 1000
*.server.app[1].io.localPort = 1001
```
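
As a quick check on the configuration above, the mean application-level data rate of each source follows from the packet length and the mean production interval. The sketch below is plain Python arithmetic, not INET code; the function name `mean_rate_mbps` is made up for this illustration.

```python
def mean_rate_mbps(packet_length_bytes: int, mean_interval_us: float) -> float:
    """Mean data rate in Mbps for fixed-size packets sent at a mean interval."""
    bits_per_packet = packet_length_bytes * 8
    # Bits per microsecond is numerically equal to Mbps.
    return bits_per_packet / mean_interval_us


best_effort = mean_rate_mbps(1000, 200)  # app[0]: exponential(200us)
video = mean_rate_mbps(1000, 400)        # app[1]: exponential(400us)
print(f"best effort: {best_effort:.1f} Mbps, video: {video:.1f} Mbps")
```

These are long-term averages only; because the intervals are exponentially distributed, the instantaneous rate fluctuates around them.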

The two streams have two different traffic classes: best effort and video. The bridging layer identifies the outgoing packets by their UDP destination port. The client encodes and the switch decodes the streams using the IEEE 802.1Q PCP field.

```ini
# enable outgoing streams
*.client.hasOutgoingStreams = true

# client stream identification
*.client.bridging.streamIdentifier.identifier.mapping = [{stream: "best effort", packetFilter: expr(udp.destPort == 1000)},
                                                         {stream: "video", packetFilter: expr(udp.destPort == 1001)}]

# client stream encoding
*.client.bridging.streamCoder.encoder.mapping = [{stream: "best effort", pcp: 0},
                                                 {stream: "video", pcp: 4}]

# disable forwarding IEEE 802.1Q C-Tag
*.switch.bridging.directionReverser.reverser.excludeEncapsulationProtocols = ["ieee8021qctag"]

# switch stream decoding
*.switch.bridging.streamCoder.decoder.mapping = [{pcp: 0, stream: "best effort"},
                                                 {pcp: 4, stream: "video"}]
```
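
The identification, encoding, and decoding steps above amount to three lookups. The following Python sketch mirrors the mappings from the configuration to show how a packet's stream name survives the hop from client to switch; the function names are hypothetical and this is not how INET implements the bridging layer.

```python
# Mappings copied from the configuration above.
PORT_TO_STREAM = {1000: "best effort", 1001: "video"}  # client stream identification
STREAM_TO_PCP = {"best effort": 0, "video": 4}         # client stream encoding
PCP_TO_STREAM = {0: "best effort", 4: "video"}         # switch stream decoding


def encode_at_client(udp_dest_port: int) -> int:
    """Identify the stream by UDP destination port and return its PCP value."""
    stream = PORT_TO_STREAM[udp_dest_port]
    return STREAM_TO_PCP[stream]


def decode_at_switch(pcp: int) -> str:
    """Recover the stream name from the PCP value carried in the 802.1Q tag."""
    return PCP_TO_STREAM[pcp]


for port in (1000, 1001):
    pcp = encode_at_client(port)
    print(port, "->", pcp, "->", decode_at_switch(pcp))
```

The stream name itself never travels on the wire; only the PCP value does, which is why the encoder and decoder mappings must agree.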

The asynchronous traffic shaper requires the transmission eligibility time for each packet to be already calculated by the ingress per-stream filtering.

```ini
# enable ingress per-stream filtering
*.switch.hasIngressTrafficFiltering = true

# per-stream filtering
*.switch.bridging.streamFilter.ingress.numStreams = 2
*.switch.bridging.streamFilter.ingress.classifier.mapping = {"best effort": 0, "video": 1}
*.switch.bridging.streamFilter.ingress.*[0].display-name = "best effort"
*.switch.bridging.streamFilter.ingress.*[1].display-name = "video"
*.switch.bridging.streamFilter.ingress.meter[*].typename = "EligibilityTimeMeter"
*.switch.bridging.streamFilter.ingress.meter[*].maxResidenceTime = 10ms
*.switch.bridging.streamFilter.ingress.meter[0].committedInformationRate = 41.68Mbps
*.switch.bridging.streamFilter.ingress.meter[0].committedBurstSize = 10 * (1000B + 28B)
*.switch.bridging.streamFilter.ingress.meter[1].committedInformationRate = 20.84Mbps
*.switch.bridging.streamFilter.ingress.meter[1].committedBurstSize = 5 * (1000B + 28B)
*.switch.bridging.streamFilter.ingress.filter[*].typename = "EligibilityTimeFilter"
```
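
To give a feel for what an eligibility time computation can look like, here is a simplified Python sketch of a token-bucket style calculation driven by the same three parameters as the meter configuration (committed information rate, committed burst size, maximum residence time). It is an illustration under stated assumptions, not the code of INET's EligibilityTimeMeter: the class and method names are hypothetical, the bucket is assumed to start full at t = 0, and the group eligibility time shared between streams is left out.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class EligibilityMeter:
    """Simplified per-stream eligibility time computation (illustrative only)."""
    committed_information_rate: float  # bits per second
    committed_burst_size: float        # bits
    max_residence_time: float          # seconds
    bucket_empty_time: float = field(init=False)

    def __post_init__(self) -> None:
        # Assumption: the bucket starts full at t = 0.
        self.bucket_empty_time = -self.committed_burst_size / self.committed_information_rate

    def process(self, arrival_time: float, length_bits: float) -> Optional[float]:
        """Return the packet's eligibility time, or None if it should be dropped."""
        length_recovery = length_bits / self.committed_information_rate
        empty_to_full = self.committed_burst_size / self.committed_information_rate
        scheduler_eligibility = self.bucket_empty_time + length_recovery
        bucket_full_time = self.bucket_empty_time + empty_to_full
        eligibility = max(arrival_time, scheduler_eligibility)
        if eligibility > arrival_time + self.max_residence_time:
            return None  # the packet would wait too long; state is left unchanged
        if eligibility < bucket_full_time:
            self.bucket_empty_time = scheduler_eligibility
        else:
            # The bucket filled up before this packet; continue from a full bucket.
            self.bucket_empty_time = scheduler_eligibility + eligibility - bucket_full_time
        return eligibility


# Toy numbers: 1 Mbps rate, a burst of two 1000-bit packets, 1.5 ms residence limit.
meter = EligibilityMeter(committed_information_rate=1e6,
                         committed_burst_size=2000,
                         max_residence_time=0.0015)
times = [meter.process(arrival_time=0.0, length_bits=1000) for _ in range(4)]
print(times)
```

With four packets arriving together, the first two fit in the burst allowance, the third is delayed by one packet time, and the fourth exceeds the residence limit and is rejected. This is the mechanism by which the meter and filter together hand each surviving packet a transmission eligibility time.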

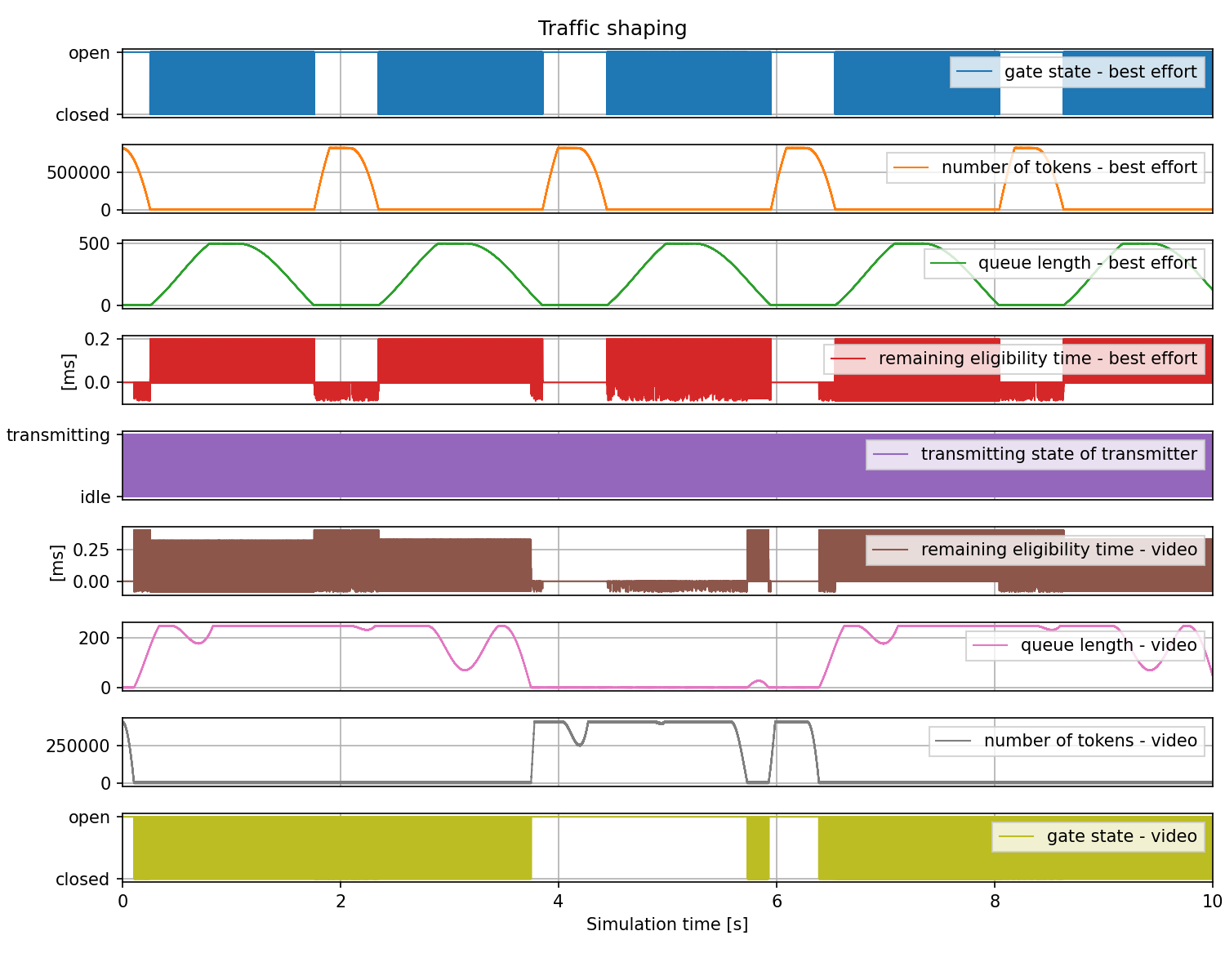

The traffic shaping takes place in the outgoing network interface of the switch where both streams pass through. The traffic shaper limits the data rate of the best effort stream to 40 Mbps and the data rate of the video stream to 20 Mbps. The excess traffic is stored in the MAC layer subqueues of the corresponding traffic class.

```ini
# enable egress traffic shaping
*.switch.hasEgressTrafficShaping = true

# asynchronous traffic shaping
*.switch.eth[*].macLayer.queue.numTrafficClasses = 2
*.switch.eth[*].macLayer.queue.*[0].display-name = "best effort"
*.switch.eth[*].macLayer.queue.*[1].display-name = "video"
*.switch.eth[*].macLayer.queue.queue[*].typename = "EligibilityTimeQueue"
*.switch.eth[*].macLayer.queue.transmissionSelectionAlgorithm[*].typename = "Ieee8021qAsynchronousShaper"
```
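
One way to picture the egress side is a queue that keeps packets ordered by eligibility time and releases a packet only once the current time has reached that value. The Python sketch below models just this idea; the class `EligibilityQueue` is hypothetical, it is not INET's EligibilityTimeQueue, and it ignores the time the link needs to transmit each packet.

```python
import heapq
from typing import List, Tuple


class EligibilityQueue:
    """Holds packets ordered by eligibility time (illustrative only)."""

    def __init__(self) -> None:
        self._heap: List[Tuple[float, int, str]] = []
        self._counter = 0  # tie-breaker that preserves insertion order

    def push(self, eligibility_time: float, packet: str) -> None:
        heapq.heappush(self._heap, (eligibility_time, self._counter, packet))
        self._counter += 1

    def pop_eligible(self, now: float) -> List[str]:
        """Remove and return every packet whose eligibility time has been reached."""
        released = []
        while self._heap and self._heap[0][0] <= now:
            released.append(heapq.heappop(self._heap)[2])
        return released


queue = EligibilityQueue()
queue.push(0.003, "p3")
queue.push(0.001, "p1")
queue.push(0.002, "p2")
print(queue.pop_eligible(now=0.002))  # packets eligible at or before t = 2 ms
print(queue.pop_eligible(now=0.010))  # the remainder
```

Packets that are not yet eligible simply stay queued, which corresponds to the excess traffic being held in the subqueue of its traffic class.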

Results

The first diagram shows the data rate of the application level outgoing traffic in the client. The data rate varies randomly over time for both traffic classes, but each fluctuates around a steady average.

The next diagram shows the data rate of the incoming traffic of the traffic shapers. This data rate is measured inside the outgoing network interface of the switch. This diagram is somewhat different from the previous one because the traffic is already in the switch, and also because it is measured at a different protocol level.

The next diagram shows the data rate of the shaped outgoing traffic of the traffic shapers. This data rate is still measured inside the outgoing network interface of the switch, but at a different location. As the diagram shows, the shapers transform the randomly varying data rate of the incoming traffic into a nearly constant data rate.

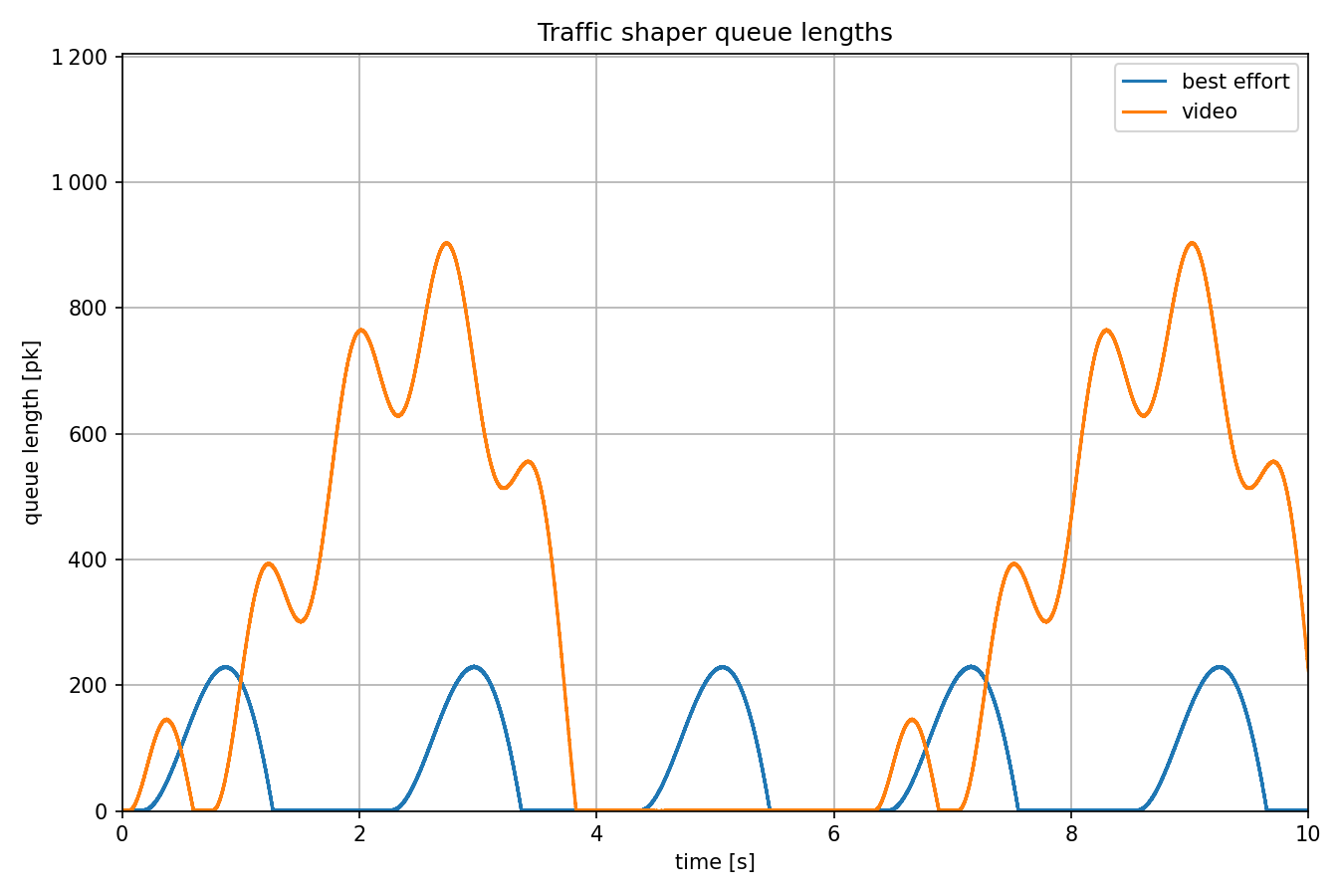

The next diagram shows the queue lengths of the traffic classes in the outgoing network interface of the switch. The queue lengths increase over time because the data rate of the incoming traffic of the traffic shapers is greater than the data rate of the outgoing traffic, and packets are not dropped.

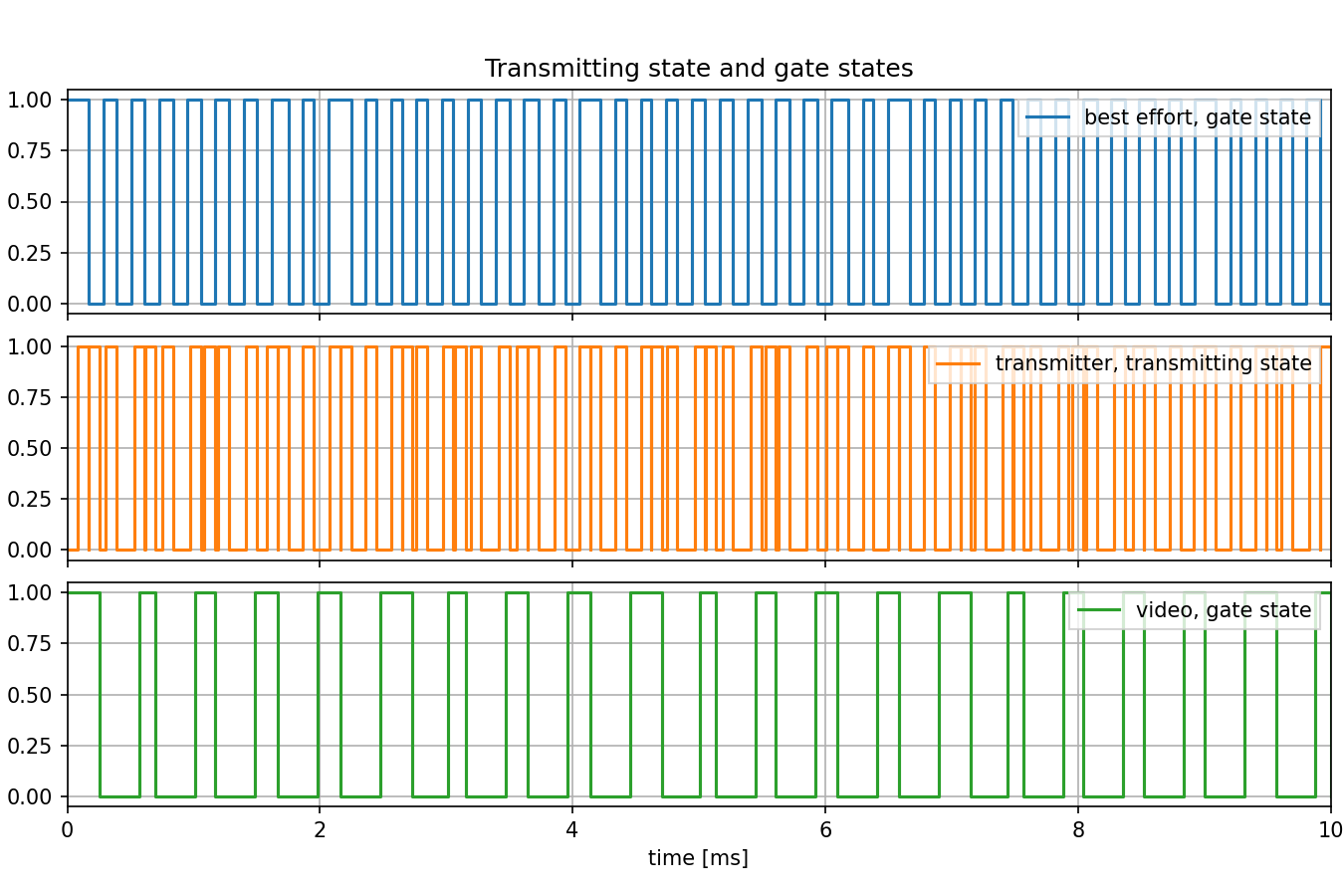

The next diagram shows the relationships (for both traffic classes) between the gate state of the transmission gates and the transmitting state of the outgoing network interface.

The last diagram shows the data rate of the application level incoming traffic in the server. The data rate is somewhat lower than the data rate of the outgoing traffic of the corresponding traffic shaper. The reason is that they are measured at different protocol layers.
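
The effect of measuring at different protocol layers can be estimated with simple arithmetic: a rate that counts per-packet headers shrinks by the payload fraction when only application data is counted. The Python sketch below uses the 1000 B payload from the configuration and an assumed 42 B of per-packet overhead. That overhead value is hypothetical, chosen only because it relates the committed information rates of 41.68 Mbps and 20.84 Mbps to payload rates of 40 Mbps and 20 Mbps; the actual header sizes at each measurement point are not stated here.

```python
def payload_rate_mbps(rate_with_overhead_mbps: float,
                      payload_bytes: int,
                      overhead_bytes: int) -> float:
    """Scale a rate that includes per-packet overhead down to the payload-only rate."""
    return rate_with_overhead_mbps * payload_bytes / (payload_bytes + overhead_bytes)


# Assumed values: 1000 B payload (from the configuration), hypothetical 42 B overhead.
print(round(payload_rate_mbps(41.68, 1000, 42), 2))
print(round(payload_rate_mbps(20.84, 1000, 42), 2))
```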

Sources: omnetpp.ini

Try It Yourself

If you already have INET and OMNeT++ installed, start the IDE by typing omnetpp, import the INET project into the IDE, then navigate to the inet/showcases/tsn/trafficshaping/asynchronousshaper folder in the Project Explorer. There, you can view and edit the showcase files, run simulations, and analyze results.

Otherwise, there is an easy way to install INET and OMNeT++ using opp_env, and run the simulation interactively.

Ensure that opp_env is installed on your system, then execute:

```shell
$ opp_env run inet-4.4 --init -w inet-workspace --install --chdir \
    -c 'cd inet-4.4.*/showcases/tsn/trafficshaping/asynchronousshaper && inet'
```

This command creates an inet-workspace directory, installs the appropriate versions of INET and OMNeT++ within it, and launches the inet command in the showcase directory for interactive simulation.

Alternatively, for a more hands-on experience, you can first set up the workspace and then open an interactive shell:

```shell
$ opp_env install --init -w inet-workspace inet-4.4
$ cd inet-workspace
$ opp_env shell
```

Inside the shell, start the IDE by typing omnetpp, import the INET project, then start exploring.